About multi-tenancy on Tofino hardware

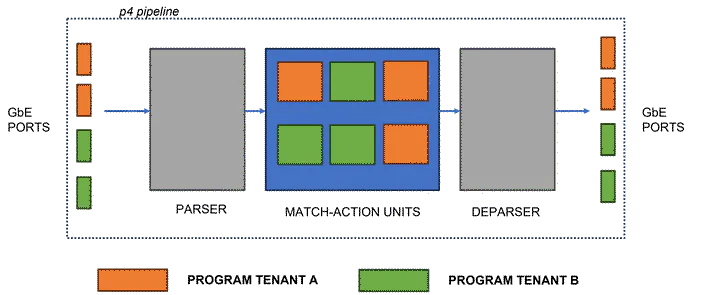

Given the multi-tenant nature of the cluster, isolation mechanisms are essential to prevent tenants from interfering with each other and to allow experiments to run concurrently. Unlike general-purpose compute infrastructure, existing programmable data-plane technologies lack essential support for multi-tenancy. This is also the case for the Intel Tofino product line.

These switches are equipped with multiple packet-processing pipelines that can be programmed individually, which in principle enables some form of parallel program execution. However, they do not implement any mechanism for fault, resource, or performance isolation across the programs of different tenants, which can therefore interfere with each other, either accidentally or maliciously. In particular, every time a P4 program is installed, all pipelines undergo a complete reconfiguration, potentially disrupting the operation of other tenants’ programs.

Do we have a way to enable concurrent program execution while avoiding interference among tenants?

Virtualizing Hardware Resources for Multi-Tenancy

To enable concurrent program execution while avoiding interference among tenants, several approaches can be considered:

- Compile-Time Merging: This approach statically combines and compiles the P4 programs of different tenants into a single P4 binary, which is then installed on the target switch. P4Visor¹ follows this line of work. However, the compilation phase and the pipeline reconfiguration that must precede runtime may require tedious coordination cycles across tenants.

- Hypervisor-Like P4 Programs: Solutions like HyPer4² instead use a hypervisor-like P4 program to dynamically emulate other P4 programs. This approach provides the illusion of multiple data-plane programs but incurs significant overhead, limiting its applicability to software and FPGA targets.

- Reconfigurable Match Tables (RMT) Hardware Extensions: More recent work, such as Menshen³, proposes extensions to the RMT hardware architecture for data-plane multi-tenancy. Menshen adds an indirection layer that uses small tables to dynamically load different per-tenant configurations for the same RMT resource. While these solutions allow running per-tenant packet-processing logic, they are not specifically designed for Tofino hardware.

- State-of-the-Art Transparent Virtualization: SwitchVM⁴ introduces a runtime interpreter that executes Data Plane Filters (DPFs) in a P4 sandbox environment. Similarly to eBPF programs, DPFs are short programs that can be pre-installed on a PISA-like device and whose execution is triggered by arriving packets. Installing a new DPF consists of installing match-action rules that redirect to a fixed set of executable instructions (e.g., ADD, MOV, etc.) implemented as atomic P4 actions, so it does not involve any pipeline reconfiguration. SwitchVM thus enables time-sharing of the pipeline hardware across multiple tenants. Moreover, it guarantees strict resource isolation between DPFs belonging to different tenants, thanks to virtual registers with load/store instructions to access per-tenant state. It effectively achieves transparent virtualization from a hardware standpoint; however, tenants must write code using a new instruction set, and not all P4 constructs are reflected in the DPF instruction set. Finally, SwitchVM has been implemented on a Tofino target, which makes it an interesting approach to consider for SUPERNET.
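
The interpreter idea behind the last approach can be illustrated with a toy model in Python. This is only a sketch under stated assumptions, not SwitchVM's actual instruction set or API: the names `Interpreter`, `install_dpf`, `process_packet`, and `NUM_VREGS`, as well as the two-operand instruction encoding, are hypothetical. It shows the two properties discussed above: installing a program only adds table entries that point to a fixed set of pre-built operations, and each tenant works on its own virtual registers.

```python
from dataclasses import dataclass, field

NUM_VREGS = 4  # hypothetical number of virtual registers per tenant


# Fixed instruction set: these stand in for pre-compiled atomic actions.
# Installing a program never adds or changes these functions.
def op_mov(regs, dst, imm):
    regs[dst] = imm


def op_add(regs, dst, src):
    regs[dst] = (regs[dst] + regs[src]) & 0xFFFFFFFF


INSTRUCTIONS = {"MOV": op_mov, "ADD": op_add}


@dataclass
class Interpreter:
    # Stand-in for match-action rules: (tenant, step) -> (opcode, a, b)
    rules: dict = field(default_factory=dict)
    # Per-tenant virtual registers; a program only sees its own list
    vregs: dict = field(default_factory=dict)

    def install_dpf(self, tenant, program):
        """Install a program by adding table entries only."""
        for op, dst, _ in program:
            if op not in INSTRUCTIONS:
                raise ValueError(f"unsupported instruction: {op}")
            if not 0 <= dst < NUM_VREGS:
                raise ValueError(f"register out of range: {dst}")
        # Drop this tenant's old entries; other tenants are untouched.
        self.rules = {k: v for k, v in self.rules.items() if k[0] != tenant}
        for step, instr in enumerate(program):
            self.rules[(tenant, step)] = instr
        self.vregs.setdefault(tenant, [0] * NUM_VREGS)

    def process_packet(self, tenant):
        """Run the tenant's program once, as if triggered by a packet."""
        regs = self.vregs[tenant]
        step = 0
        while (tenant, step) in self.rules:
            op, a, b = self.rules[(tenant, step)]
            INSTRUCTIONS[op](regs, a, b)
            step += 1
        return list(regs)


vm = Interpreter()
vm.install_dpf("tenant_a", [("MOV", 0, 5), ("MOV", 1, 7), ("ADD", 0, 1)])
vm.install_dpf("tenant_b", [("MOV", 0, 1)])
result_a = vm.process_packet("tenant_a")
result_b = vm.process_packet("tenant_b")
```

In this model, installing `tenant_b`'s program leaves `tenant_a`'s entries and registers unchanged, which mirrors the isolation and no-reconfiguration properties described above. A real target would additionally have to bound program length and enforce per-tenant memory ranges in hardware.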

These approaches virtualize hardware resources and enable concurrent program execution while ensuring isolation among tenants. However, not all of them have been tested on Tofino switches, and some require extra effort from the P4 developer.

Initially, we opted for a static resource reservation system which grants exclusive access to the switch to one user at a time, providing isolation but not concurrency on the hardware.
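
A static reservation scheme of this kind can be sketched as a calendar that rejects overlapping time slots. The following Python example is an illustrative assumption about how such a system might work, not a description of the actual reservation tool: the class `SwitchCalendar` and its methods `reserve` and `holder` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Reservation:
    user: str
    start: datetime
    end: datetime  # exclusive upper bound


class SwitchCalendar:
    """Grants exclusive access to one switch, one user at a time."""

    def __init__(self):
        self._slots = []

    def reserve(self, user, start, end):
        """Return True if the slot was granted, False if it overlaps."""
        if end <= start:
            raise ValueError("reservation must end after it starts")
        for r in self._slots:
            # Two half-open intervals overlap if each starts before
            # the other one ends.
            if start < r.end and r.start < end:
                return False
        self._slots.append(Reservation(user, start, end))
        return True

    def holder(self, when):
        """Return the user holding the switch at `when`, or None."""
        for r in self._slots:
            if r.start <= when < r.end:
                return r.user
        return None


def hour(h):
    # Helper for the example: a fixed day, varying hour.
    return datetime(2024, 1, 1, h)


cal = SwitchCalendar()
granted_alice = cal.reserve("alice", hour(9), hour(11))
granted_bob_overlap = cal.reserve("bob", hour(10), hour(12))
granted_bob_later = cal.reserve("bob", hour(11), hour(12))
```

Here the overlapping request from `bob` is refused and the back-to-back one is granted, so at any instant at most one user holds the switch. This gives isolation through exclusivity, at the cost of concurrency.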

1. P. Zheng, T. Benson, and C. Hu, “P4visor: lightweight virtualization and composition primitives for building and testing modular programs,” in Proceedings of the 14th International Conference on Emerging Networking EXperiments and Technologies, Association for Computing Machinery, 2018.

2. D. Hancock and J. van der Merwe, “HyPer4: Using P4 to virtualize the programmable dataplane,” in Proceedings of the 12th International on Conference on Emerging Networking EXperiments and Technologies, Association for Computing Machinery, 2016.

3. T. Wang, X. Yang, G. Antichi, A. Sivaraman, and A. Panda, “Isolation mechanisms for High-Speed Packet-Processing pipelines,” in 19th USENIX Symposium on Networked Systems Design and Implementation (NSDI 22). USENIX Association, 2022.

4. S. Khashab, A. Rashelbach, and M. Silberstein, “Multitenant In-Network acceleration with SwitchVM,” in 21st USENIX Symposium on Networked Systems Design and Implementation (NSDI 24). USENIX Association, 2024.